By Terry Paullin & Joel Silver

The age-old adage “Bigger is Better” has been debated ad infinitum. I get on the PRO side when the topic is automotive engine cubic inches, the size of a slab of B-B-Q ribs or light output from a Home Theater projector.

I’m on the NAY side of that same assertion when the topic is the number of girlfriends in the same room, inch-columns of print media given to the antics of the Kardashians, or the number of primary colors some display manufacturers use beyond Red, Green and Blue.

Applied to a current hot topic in OUR world (HDMI cable bandwidth), the answer to “bigger is better” is a resounding YES!

You may have read elsewhere, as we did, from some otherwise credible Industry “Experts” that most any decent HDMI cable (read: not out of the bargain bin) will see you through the current crop of UHD TVs, and that the notion that you might need something new was “Nonsense”.

Folks, that statement is, well … NONSENSE!

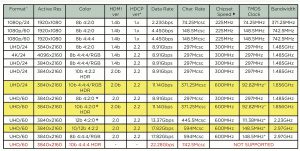

Begin to digest the chart above.

Before we get into why this bad advice should go unheeded, there are some fundamentals of not just HDMI cables but ALL cables you should be aware of. These aren’t A/B comparisons or subjective observations from a sample size of three; these are immutable facts of cable physics.

In the cable world, there is a thing called distributed capacitance. In a simple coaxial cable, it is the capacitance between the center conductor and the shield. This capacitance is “distributed” all along the length of the cable: longer cable, more “C”. This “C” is also heavily influenced by the dielectric (the insulating material between wire and shield), specifically its thickness and its dielectric constant. The culprit “C” shunts away part of the original signal, weakening it.

Yes, it’s more complex when there are 19 wires bundled into one cable (HDMI), but the principles are the same. And, yes, bits are just 1’s and 0’s, but as those bits are packed closer together and sent faster down the line, some strange things can happen. There is attenuation (lower voltage) of the original signal (due to “C”), external noise injection, a very weird phenomenon called “return loss” (signal energy reflected back toward the source by impedance mismatches) and a host of other high-frequency anomalies. Many of these can be minimized by careful manufacturing that controls wire size and spacing, dielectric thickness and material. Read those as added cost!

A comprehensive description of these effects as a function of frequency would require a tome thicker than the magazine you are holding and would put you to sleep quicker than an Adam Sandler movie. All we want you to take away from this mini-treatise is that as more and more data has to move faster and faster down a cable, a different kind of physical behavior has to be dealt with, or your image will not get from UHD player to display unmolested!
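To put rough numbers on “longer and faster”, here is a minimal Python sketch using a common textbook approximation for cable loss: conductor (skin-effect) loss that grows with the square root of frequency, plus dielectric loss that grows linearly with frequency, both scaling with length. The coefficients `K_COND` and `K_DIEL` are hypothetical placeholders, not measurements of any real HDMI cable, and the model ignores crosstalk, return loss and skew.

```python
import math

# Textbook-style approximation of cable insertion loss (a sketch, not a spec):
#   loss_dB = length_m * (K_COND * sqrt(f_GHz) + K_DIEL * f_GHz)
# K_COND and K_DIEL are HYPOTHETICAL coefficients chosen for illustration only.
K_COND = 0.8  # conductor (skin-effect) loss, dB per metre per sqrt(GHz)
K_DIEL = 0.1  # dielectric loss, dB per metre per GHz


def insertion_loss_db(length_m: float, freq_ghz: float) -> float:
    """Estimated attenuation of one conductor pair at a given frequency, in dB."""
    return length_m * (K_COND * math.sqrt(freq_ghz) + K_DIEL * freq_ghz)


def nyquist_ghz(lane_gbps: float) -> float:
    """Fundamental frequency of an alternating 1010... bit pattern on one lane."""
    return lane_gbps / 2


if __name__ == "__main__":
    # Total link rates split across HDMI's three TMDS data lanes.
    for total_gbps in (10.2, 18.0):
        f = nyquist_ghz(total_gbps / 3)
        for length_m in (2.0, 5.0):
            loss = insertion_loss_db(length_m, f)
            print(f"{total_gbps} Gbps link, {length_m} m: ~{loss:.1f} dB at {f:.1f} GHz")
```

In this model, doubling the length doubles the loss in decibels, and moving from a 10.2 Gbps link to an 18 Gbps link raises the frequency each pair must carry, so the very same cable loses more signal.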

So why the sudden need for speed? Enter the TV you asked Santa for. If it is UHD Alliance approved, it’s likely to have:

4 times as many pixels as its predecessor

Frame rates up to 60 fps

Enhanced bit depth and at least a doubling of color resolution (12-bit 4:4:4 color requires far more bandwidth than the 8-bit Rec. 709 SDR of your decades-old HDTV)

And, oh yeah, HDR (much more on that later)

The good news is that a wider color gamut (WCG) comes with no bandwidth premium!

All of these features require sending more bits per second than your current set does. Said differently, your HDMI cable that worked fine with 24-frame 2K may or may not (…uh, probably not) deliver the goods to a 60-frame 4K set with 10-bit or 12-bit 4:4:4 HDR. Due to variances in manufacturing tolerances, even two packages with the same SKU off the shelf at Best Buy might give you one that puts the right image on your screen and one that doesn’t.
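The multiplication adds up fast. This Python sketch compares the raw active-picture payload of 24-frame 2K (assumed here to be 1920 x 1080, 8-bit 4:2:0, as on HD Blu-ray) with 60-frame 4K at 12-bit 4:4:4. It deliberately ignores blanking intervals and link encoding, so real HDMI data rates are higher than these figures.

```python
# Average samples per pixel: one luma sample plus the (subsampled) chroma share.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def active_payload_bps(width: int, height: int, fps: int,
                       bit_depth: int, chroma: str) -> float:
    """Raw active-picture bit rate; ignores blanking and link encoding."""
    return width * height * fps * bit_depth * SAMPLES_PER_PIXEL[chroma]


old = active_payload_bps(1920, 1080, 24, 8, "4:2:0")   # 24-frame 2K (HD) SDR
new = active_payload_bps(3840, 2160, 60, 12, "4:4:4")  # 60-frame 4K, 12-bit 4:4:4

print(f"{old / 1e9:.2f} Gbps -> {new / 1e9:.2f} Gbps ({new / old:.0f}x)")
```

That is 4x the pixels, 2.5x the frame rate and 3x the bits per pixel: roughly 30 times the payload the old cable was asked to carry.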

If your system is connected with category cables and extenders, you are out of the UHD/HDR game entirely until a new generation of chipsets arrives, along with expensive extenders that use yet another compression trick. Your CAT pipeline is currently limited to 10.2 Gbps, and even 24-frame 4K content at 12-bit 4:4:4 needs 13.35 Gbps. If we take bit depth down to 10-bit, the signal still needs 11.14 Gbps, which breaks the 10.2 Gbps HDMI 1.4 “bank”, so your system still fails to deliver HDR. Review the chart above again and pay attention to the data rate column.
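For readers who want to check the data rate column, here is a simplified Python sketch of how HDMI 1.4/2.0 TMDS link rates are commonly derived. It assumes the standard CTA-861 total timings (active pixels plus blanking) for 3840 x 2160 at 24 and 60 Hz, deep color scaling the clock by bit depth / 8, and three data channels each carrying 10 bits per clock. It lands close to the 24-frame 12-bit and 10-bit figures quoted above; the function and table names are our own, not from any library.

```python
# Simplified HDMI 1.4/2.0 TMDS data-rate calculator (a sketch).
# Assumes CTA-861 total timings, i.e. active pixels plus blanking.
TOTAL_TIMINGS = {
    # (active format, frame rate): (total width, total height)
    ("2160p", 24): (5500, 2250),
    ("2160p", 60): (4400, 2250),
}

HDMI_1_4_GBPS = 10.2  # 340 MHz max TMDS clock
HDMI_2_0_GBPS = 18.0  # 600 MHz max TMDS clock


def tmds_gbps(fmt: str, fps: int, bit_depth: int, chroma: str) -> float:
    if bit_depth not in (8, 10, 12):
        raise ValueError("bit_depth must be 8, 10 or 12")
    h_total, v_total = TOTAL_TIMINGS[(fmt, fps)]
    pixel_clock_hz = h_total * v_total * fps
    if chroma == "4:2:2":
        # 4:2:2 (up to 12-bit) is packed into the same clock as 8-bit 4:4:4.
        tmds_clock_hz = pixel_clock_hz
    elif chroma == "4:2:0":
        # HDMI 2.0 4:2:0 halves the clock, then deep color scales it.
        tmds_clock_hz = pixel_clock_hz // 2 * bit_depth // 8
    elif chroma == "4:4:4":
        # RGB / 4:4:4: deep color raises the clock by bit_depth / 8.
        tmds_clock_hz = pixel_clock_hz * bit_depth // 8
    else:
        raise ValueError(f"unknown chroma format {chroma!r}")
    # Three data channels, each carrying 10 bits per TMDS clock (8b/10b coding).
    return tmds_clock_hz * 10 * 3 / 1e9


if __name__ == "__main__":
    cases = [(24, 12, "4:4:4"), (24, 10, "4:4:4"), (60, 8, "4:4:4"),
             (60, 10, "4:4:4"), (60, 10, "4:2:0")]
    for fps, bits, chroma in cases:
        rate = tmds_gbps("2160p", fps, bits, chroma)
        verdict = ("fits HDMI 1.4" if rate <= HDMI_1_4_GBPS
                   else "needs HDMI 2.0" if rate <= HDMI_2_0_GBPS
                   else "exceeds HDMI 2.0")
        print(f"4K{fps} {bits}-bit {chroma}: {rate:.2f} Gbps ({verdict})")
```

Under these assumptions the same arithmetic shows why 4K60 10-bit 4:4:4 (about 22.3 Gbps) is out of reach on an 18 Gbps link, while 4K60 10-bit 4:2:0 (about 11.1 Gbps) fits.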

At the ISF, we get reports daily from calibrators and install techs chronicling set-up troubles in the field. Recently, we’ve seen a near epidemic of UHD Blu-ray HDR picture delivery failures, which leads us to…

… A funny thing happened to my HDR on the way to my TV.

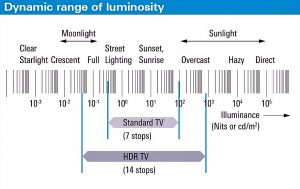

HDR is arguably the “hottest” of the new features being offered to us. As you can see from the chart above, it has a vastly wider luminance range, and each single “f-stop” improvement actually doubles the luminance: a 2X improvement!
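Because each f-stop is a doubling, the number of stops between two brightness levels is simply the base-2 logarithm of their ratio. A tiny Python sketch; the nit values are illustrative, with 100 nits assumed as a typical SDR reference peak.

```python
import math


def stops(low_nits: float, high_nits: float) -> float:
    """Number of f-stops between two luminance levels: each stop is a doubling."""
    return math.log2(high_nits / low_nits)


# Illustrative levels, not measurements:
print(stops(100, 200))             # one stop: twice as bright
print(stops(100, 1600))            # four stops: 2 x 2 x 2 x 2 = 16 times as bright
print(round(stops(100, 1000), 2))  # a 1,000-nit peak vs. a 100-nit SDR peak
```

So a set that peaks at 1,000 nits sits a little over three stops above a 100-nit SDR picture at the bright end alone.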

None of these things are particularly “funny”, especially to the client who paid for an HDR-capable TV and an HDR source signal and gets neither on screen. The problems currently happening in the field often involve several failures occurring in the same system. Reported “fails” include:

UHD TV’s on-screen set-up menu not configured to accept an HDR signal.

UHD BD player’s set-up menu not configured properly to send HDR.

The system’s video pipeline forces the player to send an 8-bit component SDR 4:2:0 signal instead of a 12-bit RGB 4:4:4 HDR signal just to sync the connection. Remember, making a picture happen is HDMI’s job one!

The HDMI cable is not in the correct TV or AVR input. Not all inputs on all 4K sets and AVRs are capable of full UHD functionality, and expect cost budgets to keep it that way for quite some time.

Flat panel or projector’s inputs cannot handle the bandwidth.

AVR bandwidth is insufficient on all of its inputs.

Matrix switcher has insufficient bandwidth.

No HDMI signal analyzer available on site to see which box or cable failed.

Major retailer showrooms are showing HDR failures.

Of course, the chipset that sends the data stream or the chipset that receives and decodes it could be outdated and therefore culpable!
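Since one under-rated link anywhere between player and screen is enough to break the picture, a bandwidth check amounts to comparing each link’s rated capacity with what the signal needs. The Python sketch below does that for a hypothetical system; the device names, ratings and the 13.37 Gbps requirement are illustrative assumptions. It cannot catch set-up menu mistakes or chipset quirks, which still call for an HDMI analyzer on site.

```python
from typing import NamedTuple


class Link(NamedTuple):
    name: str
    max_gbps: float  # highest data rate this box, input or cable handles


def bottlenecks(chain: list[Link], required_gbps: float) -> list[Link]:
    """Every link, in source-to-display order, too slow for the signal."""
    return [link for link in chain if link.max_gbps < required_gbps]


# HYPOTHETICAL system: names and ratings are illustrative only.
chain = [
    Link("UHD BD player output", 18.0),
    Link("HDMI cable: player to AVR", 18.0),
    Link("AVR input 3", 10.2),
    Link("HDMI cable: AVR to TV", 10.2),
    Link("TV HDMI input 1", 18.0),
]

for link in bottlenecks(chain, required_gbps=13.37):
    print(f"Fails: {link.name} ({link.max_gbps} Gbps)")
```

Returning every failing link, rather than stopping at the first, mirrors what installers report: swapping one cable often just reveals the next weak link.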

A Tip from Terry: At the risk of offering a self-serving tip, ensuring maximum HDR delivery is not for the meek or the well-intentioned DIY aficionado. Hire a competent installer or calibrator who has the right test equipment.

Terry has a bin of various-length HDMI cables from various manufacturers, all claiming to be “premium” quality. We tested two dozen of these with a Murideo signal generator on one end and a Murideo video analyzer on the other. Only one 6-footer achieved 18 Gbps performance. Another test set of two dozen may well have produced zero good guys, and our bet is that NONE over 9 feet would pass.

Notice the HDR fail on the Murideo readout below.

Bottom line: we can’t even get to “fully spec’ed HDR” (10,000 or 4,000 nits) on any consumer set yet. The highest measurement circa 2016 was a little over 1,500 nits, and 600 to 1,000 nits are common maximum readings. Also, 4K60 4:4:4 10-bit HDR is not yet supported, because it needs more than HDMI 2.0’s 18 Gbps. Don’t be surprised to see the 18 Gbps ante raised in upcoming HDMI documents.
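The 10,000-nit figure is the ceiling of the PQ transfer function (SMPTE ST 2084) used by HDR10. This Python sketch applies the ST 2084 inverse EOTF to show where today’s 600 to 1,500 nit sets land on that 10,000-nit scale; the full-range 10-bit code conversion is a simplification, since video normally uses narrow-range levels.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10_000.0


def pq_encode(nits: float) -> float:
    """Map absolute luminance (cd/m^2) to a normalized PQ signal in [0, 1]."""
    y = max(0.0, min(nits, PQ_PEAK_NITS)) / PQ_PEAK_NITS
    ym1 = y ** M1
    return ((C1 + C2 * ym1) / (1 + C3 * ym1)) ** M2


def to_10bit_code(signal: float) -> int:
    """Full-range 10-bit code value (a simplification; video usually uses narrow range)."""
    return round(signal * 1023)


for nits in (100, 600, 1000, 1500, 4000, 10_000):
    s = pq_encode(nits)
    print(f"{nits:>6} nits -> PQ {s:.3f} (10-bit code ~{to_10bit_code(s)})")
```

Because PQ spends its code values perceptually, a 1,000-nit peak already sits around three-quarters of the way up the signal range, and the remaining quarter is reserved for brightness no consumer set can yet reach.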

Such is the price for a best-that-it-can-be “Immaculate Image”.

The amazing good news, and the very reason for this column’s existence, is that when I personally saw HDR/WCG high-bit depth pictures on my own installs, I was downright awestruck!

If you get it right, this is a quantum step forward and by a huge margin the very best consumer TV pictures of all time!